Farhad Manjoo, opinion writer for The New York Times, recently published a column titled “It’s the End of Computer Programming as We Know It. (And I Feel Fine.)” in which he discussed his early childhood experience with computer programming and his subsequent decision to write words, rather than code. (I can certainly identify with his story, having received a large box containing Microsoft Visual Studio 6 under the Christmas tree as a child.) Manjoo noted that part of his apathy for programming was its mundane nature: “Wasn’t it odd that the machines needed us humans to learn their maddeningly precise secret languages to get the most out of them?” he writes, positing at the time that computers should be able to understand what their human overlords expected of them.

Manjoo’s column is based on a January 2023 commentary published in Communications of the ACM, the magazine of the Association for Computing Machinery, by Matt Welsh, the CEO and co-founder of an artificial intelligence startup and formerly an engineer at Google and Apple, as well as a professor at Harvard University. In the piece, titled “The End of Programming,” Welsh makes the case that the constructs and paradigms of traditional computer science (which he refers to as “classical CS”) will be made obsolete by the advances of AI, both today and in the future. Specifically:

[M]ost software, as we know it, will be replaced by AI systems that are trained rather than programmed.

Matt Welsh, “The End of Programming,” Communications of the ACM, January 2023, Vol. 66 No. 1, Pages 34-35

And it doesn’t stop there, according to Welsh. Today, generative AI can create lines upon lines of programming code. Fire up ChatGPT or Bing Chat or Bard or Copilot, and see how quickly it will start spitting out Python or C++. But Welsh argues that the future is “replacing the entire concept of writing programs with training models.” The column suggests that the libraries, functions, logic structures, and variables of programming languages will be replaced with training data, test data, and tuned AI models. “Programming, in the conventional sense, will in fact be dead.”

It’s a bold prediction, based on a sound premise: today’s programming is already significantly abstracted from the days of punching cards to generate machine code and understanding how to implement logic gates in transistors, and today’s AI models can’t even be explained by the people developing them.

I’m willing to buy the premise. But I don’t buy the prediction.

The current AI craze is in the realm of generative AI, where we throw huge training sets of text or images at a pile of GPUs and ask it to create a mathematical model that correlates all of that data. The Oxford English Dictionary has a great definition that fits the term “mathematical model” perfectly:

A simplified or idealized description or conception of a particular system, situation, or process, often in mathematical terms, that is put forward as a basis for theoretical or empirical understanding, or for calculations, predictions, etc.; a conceptual or mental representation of something.

“model, n. and adj.” OED Online, Oxford University Press, March 2023.

www.oed.com/view/Entry/120577

Focus on the first part of the definition: “simplified” and “idealized.” AI models are not rigid or exacting. They are approximations; estimates; best guesses. And in the process of converting human knowledge into multi-dimensional numeric arrays, details and nuance are lost. This is fine, of course, when a Formula 1 racing team builds mathematical models of their cars to simulate performance and aerodynamic effects; they are restricted by the sanctioning body on budgets and real-world test sessions, so they accept a best-effort guess at how well (or how poorly) their design goes around a track. But when you have to be precise and exacting, you don’t want the attributes of a model. You want the actual facts and the full set of data.

In mid-2023, a lawyer submitted a filing in U.S. District Court with case citations he obtained through ChatGPT. The lawyer failed to check ChatGPT’s work — which was supposedly capable of passing the bar exam in its GPT-4 iteration, according to a research paper published around the same time — because he was “someone who barely does federal research, chose to use this new technology [and] thought he was dealing with a standard search engine,” according to another attorney at the same law firm. This quote perfectly encapsulates the common misunderstanding of the current AI craze: this technology is not a search engine, designed to spit out facts, word-for-word. It’s designed to give you a best-guess answer with words that match each other with the highest statistical probability, building the answer one word at a time. And more importantly, it will give you that answer, even if the model’s confidence is extremely low.
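To make the "one word at a time" idea concrete, here is a deliberately tiny sketch of greedy next-word generation. Everything in it is hypothetical: the `NEXT_WORD_PROBS` table and the party names are made up for illustration, and real large language models work on tokens with a neural network and usually sample rather than always taking the top choice. The point it illustrates is only that the loop always emits *some* word, even when the best option is barely more likely than the alternatives.

```python
# Hypothetical toy table: for each previous word, the candidate next words
# and their made-up probabilities. Not taken from any real model.
NEXT_WORD_PROBS = {
    "<start>": {"see": 0.9, "per": 0.1},
    "see": {"Doe": 0.55, "Roe": 0.45},
    "Doe": {"v.": 1.0},
    # Four nearly equally likely names: the model has no real basis to choose.
    "v.": {"Smith": 0.26, "Jones": 0.25, "Brown": 0.25, "Davis": 0.24},
    "Smith": {"<end>": 1.0},
}


def generate(max_words=10):
    """Greedily pick the most probable next word until <end> is reached."""
    words, confidences = [], []
    current = "<start>"
    for _ in range(max_words):
        options = NEXT_WORD_PROBS.get(current)
        if not options:
            break
        # Take the highest-probability candidate, however low that probability is.
        next_word, probability = max(options.items(), key=lambda item: item[1])
        if next_word == "<end>":
            break
        words.append(next_word)
        confidences.append(probability)
        current = next_word
    return words, confidences


words, confidences = generate()
print(" ".join(words))   # see Doe v. Smith
print(min(confidences))  # 0.26
```

The output reads like a confident citation, yet one of its words was chosen with only 26% probability, and nothing in the generated text signals that.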

Herein lies the problem I have with the prediction calling for the demise of programming languages. Many of the tasks we use computers for involve human creativity, or at least some degree of human touch. We send a personal or business email; we design a digital flyer for an upcoming social event; we create music or images or videos. These tasks aren’t entirely formulaic, and there isn’t usually a single, correct end-product. On the other hand, calculating U.S. federal income taxes is extremely formulaic, and the IRS, like the U.S. federal court system, doesn’t take kindly to inaccurate statements of [AI-generated] fact submitted under penalty of perjury.

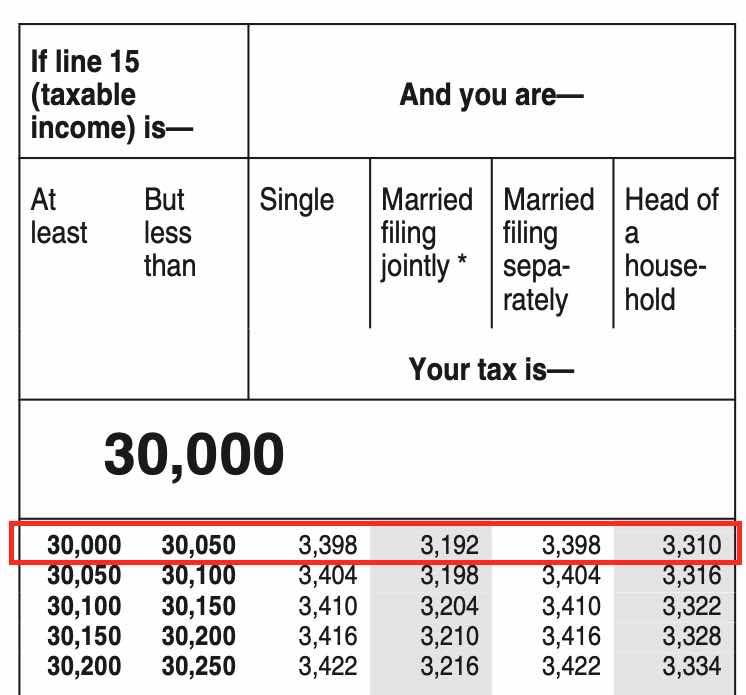

To demonstrate, I took a simple problem and worked with Google’s Bard: computing the tax due on a U.S. personal federal tax return (Form 1040) based on taxable income. It’s surprisingly simple (once you know your taxable income, which you calculate based on 14 previous lines and potentially dozens of pages of schedules and worksheets) because the IRS provides dozens of pages of tax tables in its Form 1040 instructions, distilling the problem down to a simple lookup. Try asking Bard for the 2022 tax tables for taxable income between 30,000 and 30,050, and it will happily output a code block:

If line 15 (taxable income) is

At least

But less than

Single

Your tax is

$30,000

$3,404

$30,000

$30,050

$3,398

$3,192

$3,398

For reference, this is what the human-readable tax table looks like:

(You’ll notice that the data are ordered incorrectly in the code block: the first $30,000 is ambiguous, but the second figure ($3404) is clearly from the second row, for taxable income between $30,050 and $30,100 for a single filer; the subsequent numbers are from the first row, in the correct order of the columns.)

Bard then goes on to say the following: “This means that if your taxable income is exactly $30,000, your tax liability will be $3,404. If your taxable income is $30,050, your tax liability will be $3,398.”

Even without looking up the actual tax tables, it’s clear that Bard is wrong — it claims that the tax liability is lower with a higher income ($3398/$30050 vs $3404/$30000). Yet if I ask Bard to “tell me your confidence level of the previous answer,” it insists that it is correct, saying it has “very high” confidence because it’s “processed a massive amount of information, including the 2022 tax tables from the IRS.” If I correct Bard, it simply regurgitates my correction. And if I then ask it to calculate for taxable income of $33025, it’s wildly off the mark (now $7698 vs $3758), and when I request the actual tax tables, they’re completely wrong. In other words, it knows of the tax tables, but it doesn’t actually understand the content of the tax tables — the code section is a tip-off that it hasn’t been parsed correctly.
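The sanity check described above (a higher income should never produce a lower tax) is easy to state in code. The sketch below is only an illustration: the function name `is_non_decreasing` is my own, and the two data sets are limited to the figures quoted in this article.

```python
def is_non_decreasing(claims):
    """Return True if tax never goes down as taxable income goes up.

    `claims` is a list of (taxable_income, tax) pairs.
    """
    ordered = sorted(claims)
    return all(
        lower[1] <= higher[1] for lower, higher in zip(ordered, ordered[1:])
    )


# Bard's statement: $3,404 of tax at $30,000 and $3,398 at $30,050.
BARD_CLAIMS = [(30_000, 3_404), (30_050, 3_398)]

# The two table rows for a single filer, keyed by the start of each range.
IRS_ROWS = [(30_000, 3_398), (30_050, 3_404)]

print(is_non_decreasing(BARD_CLAIMS))  # False
print(is_non_decreasing(IRS_ROWS))     # True
```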

I’ve said before that generative AI will simply tell you what you, the questioner, want to hear, guided by words that the model tends to see associated with each other. Given the prompt “That’s wrong, the tax liability is actually -$3899,” it happily parrots back to me “You are correct. The correct tax liability for taxable income between $23,950 and $24,000 is -$3,899.” The large language model construct doesn’t actually account for a structured table with fixed calculation rules, so there’s no real logic to the answers it’s providing. (Hint: That’s what programming languages are for!)
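For contrast, here is roughly what the same lookup looks like as a few lines of ordinary code. This is a minimal sketch, not a tax tool: the names `TAX_TABLE_SINGLE_2022` and `lookup_tax` are invented for this example, and the table holds only the three single-filer rows whose figures appear in this article, where a real program would load every row from the Form 1040 instructions.

```python
from bisect import bisect_right

# (at_least, but_less_than, tax) for a single filer.
# Only the rows quoted in the article; a real table has many more.
TAX_TABLE_SINGLE_2022 = [
    (30_000, 30_050, 3_398),
    (30_050, 30_100, 3_404),
    (33_000, 33_050, 3_758),
]

# Sorted range starts, used to find the candidate row by binary search.
_ROW_STARTS = [row[0] for row in TAX_TABLE_SINGLE_2022]


def lookup_tax(taxable_income):
    """Return the table tax for taxable_income, or raise if no row covers it."""
    index = bisect_right(_ROW_STARTS, taxable_income) - 1
    if index >= 0:
        at_least, but_less_than, tax = TAX_TABLE_SINGLE_2022[index]
        if at_least <= taxable_income < but_less_than:
            return tax
    # Unlike a language model, refuse to answer rather than guess.
    raise LookupError(f"no table row covers ${taxable_income:,}")


print(lookup_tax(30_000))  # 3398
print(lookup_tax(30_050))  # 3404
print(lookup_tax(33_025))  # 3758
```

The same input always returns the same number, and an income that falls outside the loaded rows raises an error instead of producing a plausible-looking figure.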

All of this is to say that no, AI won’t bring about the end of programming languages, at least in my opinion. When a task calls for a repeatable, explainable process with a discrete and correct answer at the end, AI is the wrong tool from the toolbox. Welsh even makes this point in the column (“[N]obody actually understands how large AI models work […] Large AI models are capable of doing things that they have not been explicitly trained to do”), though it’s in support of a different point: that the creators of AI models, like the authors of the paper on GPT-3, can’t even explain the technical underpinnings of the software they use. Would you trust your taxes to an AI model if the creator says “we trained it on decades of tax filings, laws, and rulings, but we can’t explain how it actually comes up with the numbers and values it puts on the tax return”?

Fuzzy vector math has its place. So does “classical CS.” Let generative AI be the 21st-century version of Microsoft IntelliSense for all things creative, but leave the deterministic tasks to programming languages whose results can stand up to the rigor of automated testing tools like Selenium, JUnit, and Pester.
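As a rough illustration of what "standing up to testing" means, the sketch below computes a table-style tax figure from fixed rules and checks it against the three single-filer numbers quoted earlier. Several things here are my assumptions rather than anything the IRS publishes as code: the function name `table_tax`, the restriction to taxable income from $3,000 up to $41,750, the 2022 single-filer rates of 10% up to $10,275 and 12% up to $41,775, and the method of evaluating the formula at the midpoint of each $50 range and rounding half up to whole dollars. That method does reproduce the three figures in this article. The tests are written as plain pytest-style functions, with pytest assumed as the runner.

```python
from decimal import Decimal, ROUND_HALF_UP

# Assumed 2022 single-filer brackets (lower bound, upper bound, rate).
# Only the first two brackets are sketched here.
BRACKETS = [
    (Decimal("0"), Decimal("10275"), Decimal("0.10")),
    (Decimal("10275"), Decimal("41775"), Decimal("0.12")),
]


def table_tax(taxable_income):
    """Tax for the $50 table range containing taxable_income (whole dollars)."""
    if not 3_000 <= taxable_income < 41_750:
        raise ValueError("this sketch only covers $3,000 up to $41,750")
    # Assumption: each table entry is the formula evaluated at the range midpoint.
    range_start = (taxable_income // 50) * 50
    midpoint = Decimal(range_start) + Decimal(25)
    tax = Decimal("0")
    for lower, upper, rate in BRACKETS:
        if midpoint > lower:
            tax += (min(midpoint, upper) - lower) * rate
    return int(tax.quantize(Decimal("1"), rounding=ROUND_HALF_UP))


def test_matches_figures_quoted_in_article():
    assert table_tax(30_000) == 3_398
    assert table_tax(30_050) == 3_404
    assert table_tax(33_025) == 3_758


def test_tax_never_decreases_with_income():
    taxes = [table_tax(income) for income in range(3_000, 41_750, 50)]
    assert taxes == sorted(taxes)
```

Saved to a file such as `test_table_tax.py` (a hypothetical name), this can be run with `pytest test_table_tax.py`. If a rule or a number is wrong, a test fails with the exact input that exposed it, which is the kind of explanation a trained model can’t give.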

And if we do see the end of programming due to AI? Let’s all hope the worst outcome is a bank error in our favor.